Improve stat queries and ReceiverWorker logic #862

No reviewers

Labels

No labels

approved, awaiting change

bug

configuration

documentation

duplicate

enhancement

extremely low priority

feature request

Fix it yourself

help wanted

invalid

mastodon_api

needs docs

needs tests

not a bug

planned

pleroma_api

privacy

question

static_fe

triage

wontfix

No milestone

No project

No assignees

2 participants

Notifications

Due date

No due date set.

Dependencies

No dependencies set.

Reference: AkkomaGang/akkoma#862

Loading…

Reference in a new issue

No description provided.

Delete branch "Oneric/akkoma:perf_tweaks_stats+jobs"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

This is a batch of patches primarily aimed at improving perf, but also cleaning up some other stuff along the way.

I’ve been gradually applying this on my instance clearing up metrics such that job exceptions are now actually meaningful and more importantly yielding a significant reduction in jobs with a noticeable database load reduction. Addresses part of #784.

The relative difference will probably differ for larger instances, but considering the two stat queries caused a considerable chunk of total db time even for ihba and akko.wtf in #784, i expect it to still be a measurable improvement.

I haven't seen anything odd in logs anymore, but while the major perf improvements have weeks of testing,

i also only finished the last tochups about two days ago, so it might be good to give this some more testing before merge(it’s been uneventful weeks by now); perferably also on more servers.The following allows viewing a filtered log with frequent but unrelated messages removed:

grep -Ev '^$|Can'\''t find LRDD template in|(Could not decode featured collection at fetch|Object rejected).*:(not_found|forbidden|mrf_reject_check|reject)' /var/log/akkoma | awk '/^[[0-9]{4}-[0-9]{2}-[0-9]{2} / {print "";} 1 {print;}' | less +GAs usual, the PR can and is best be reviewed commit by commit and each commit message gives more details.

The main improvements fall into the following categories:

ap_enabled; backported from Pleroma + fixupsThis isn’t directly a perf improvement, but about half of all suspicious log entries I investigated during testing turned out to be a pre-existing issue related to poor

ap_enabledhandling, so to save me time and annoyance this is included before the main patches here.Dropping the user fetch and limiting refetches, might very slightly improve perf though.

Before this a known-noop worker was created for each discovered post if db search is used; and NodeInfo refetcher jobs got frequently duplicated and also created when not needed.

Creating, queuing and cleaning up jobs comes with overhead and increases PostgreSQL MVCC-related table bloat.

Note: the job filtering mechanism here is differently implemented than in the 6-month-old #789 PR, the latter could adopt the more transparent version introduced here once both are merged.

Even though stat queries are only run once per 5min, they were ridiculously expensive and caused a well visible, regular spike in average query time. On one single user instance in #784 it even took up more than half of the total database time!

Previously e.g. Deletes of unknown objects failed the validator and always exhausted all retries and similar other things.

The Delete scenarios is quite common and when a remote user followed by a local user deleted most posts, this caused the by far biggest receiver worker job count (job failures) per minute the server experienced. But also in general there was a constant high background job failure rate from needlessly retried receiver jobs.

Ever since adding the final "gracefully ignore" bits, i didn’t encounter any "invalid AP" logs anymore so far.

Important disclaimers: this was actually production tested on something based off upstream commit

71d3bbd7bei.e. slightly behind current develop; pulling in commitschanging the db layout might have disturbed the relative perf diff stats.

Furthermore, more patches not in this PR were applied on top of the aforementioned commit, most notably in this context, PR #789 which severely lessens the impact of

the by far biggest offender in unpatched outlier stats (ref #784). Without #789 but with attachment cleanup enabled there likely won't be any noticeable change since the absurdly high cost of futile attachment cleanup attempts drowns out everything else.

Results:

Before the patch the average total db query time had well visible spikes every 5 minutes when the stat queries got refreshed:

The average exec time of the remote and local user count query (both averaged together) was 18000µs per query; after the patches the remaining local user count queries only take 67µs on average and the remote estimate is quite fast too. The regular spikes are gone:

No image for this, but similarly the processing cost from the

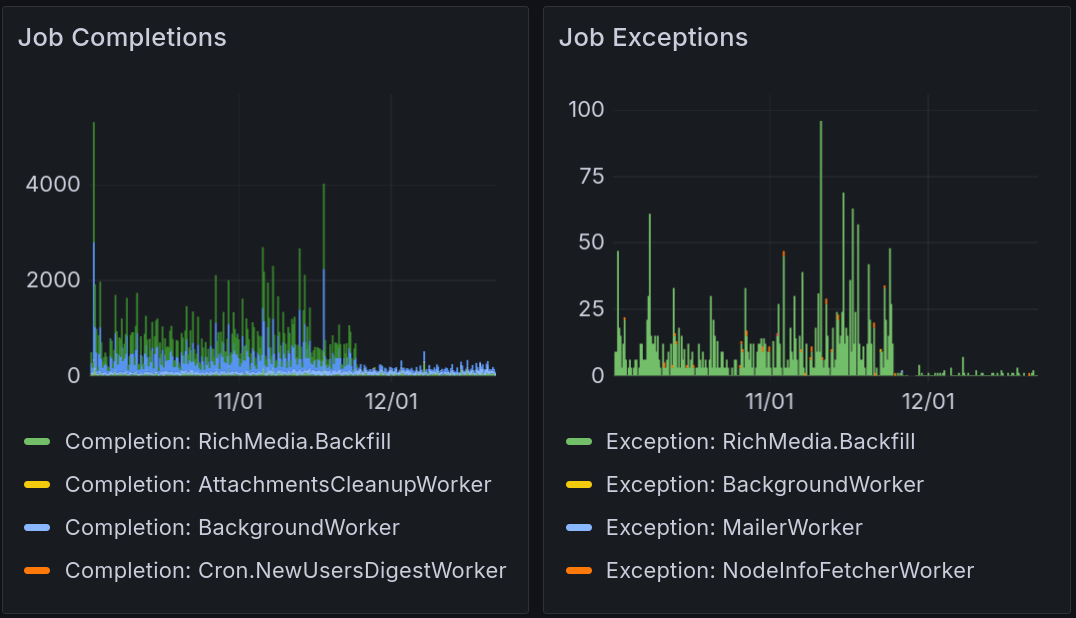

oban_jobsUPDATEquery also dropped; not only from running fewer queries, the cost of each individual query also dropped to a third presumably due to less bloat from deleted rows (oban tables are regularly reindexed by default).As for jobs other than Receive-Fetch-Publish (RFP), the decrease is also well visible (note there are several hues of blue used to represent jobs and not all indicators shown in the image; the bulk of the jobs prior to patching were SearchIndexingWorker and NodeInfoFetcher, not RichMedia nd Background):

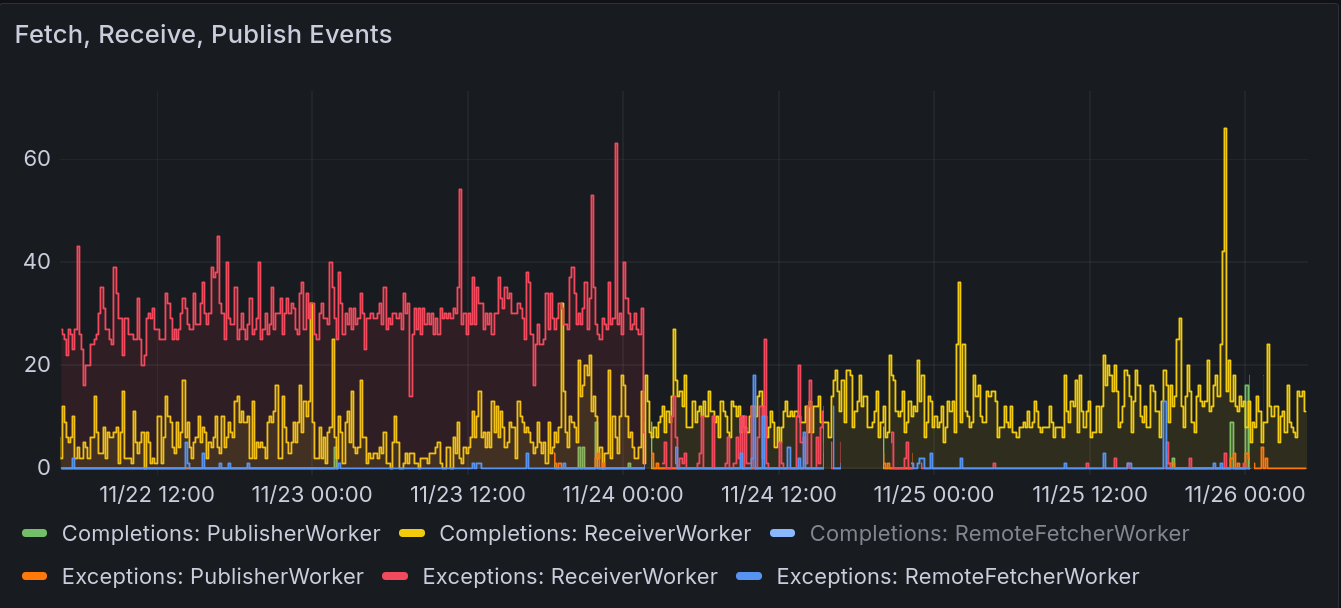

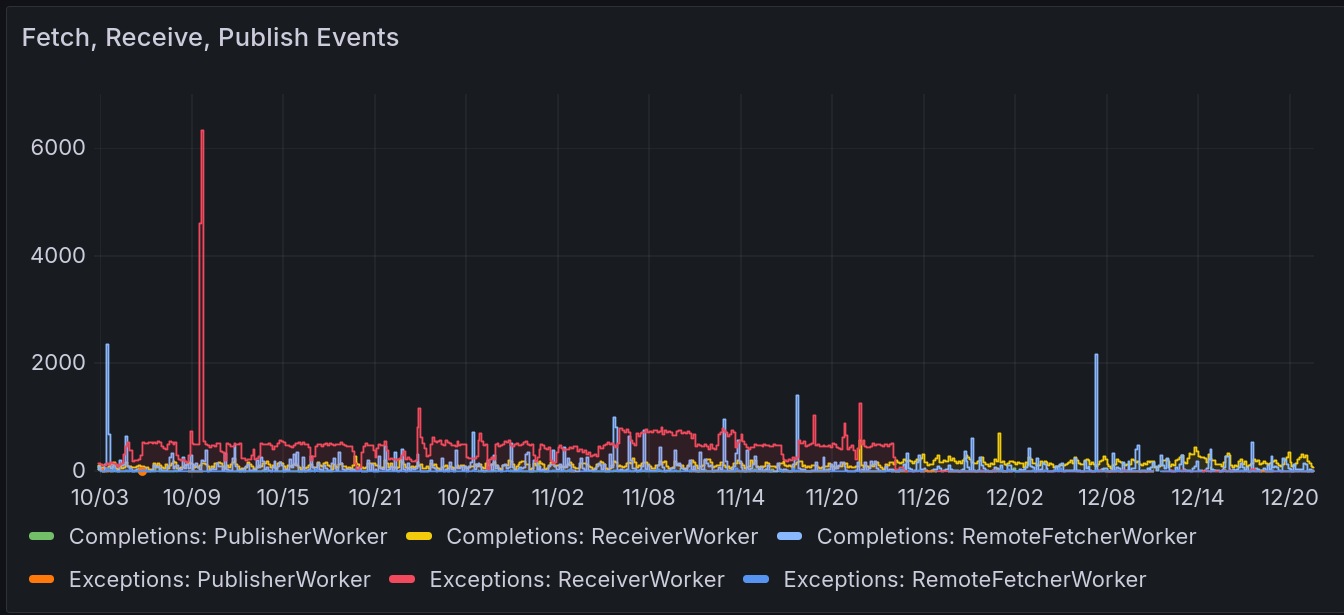

As for RFP jobs, there used to be a constant background of failures, exceeding completions

See also the huge failure spike arising from an user account sending out deletes for almost all posts, barely any of which are known locally and thus reprocessed 5 times:

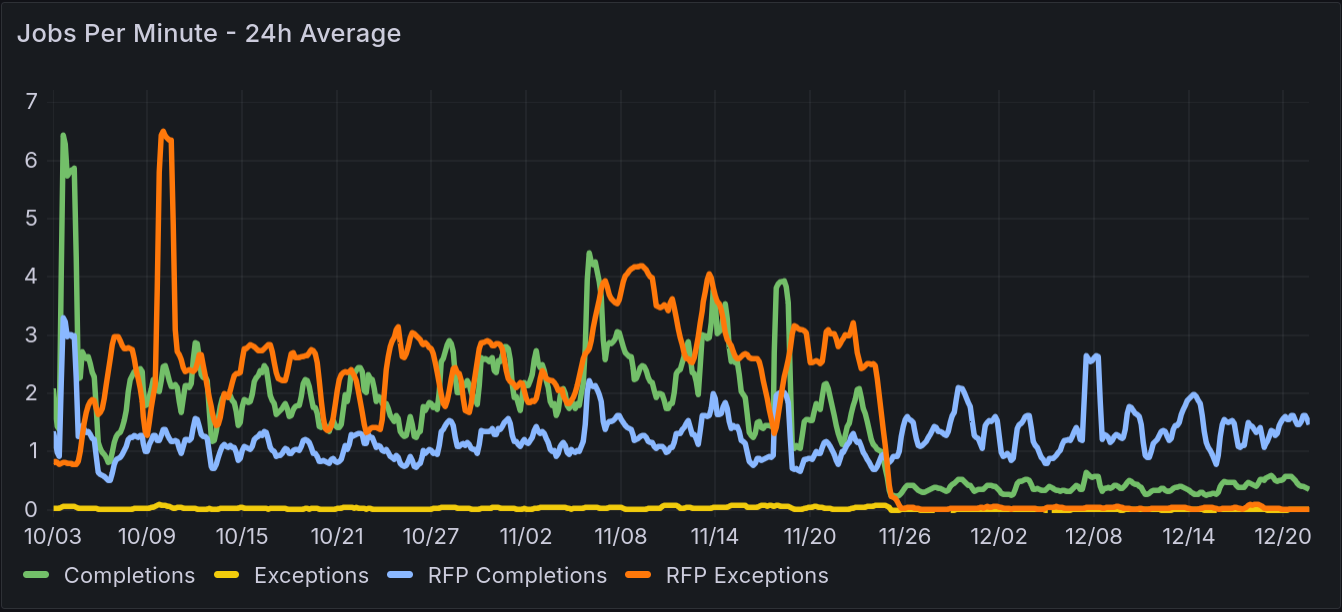

And the average job count per minute:

Everything combined also measurably decreased time spent on db actions on this small instance. Spikes in total time without a proportional rise in exec time are from a request flood causing many queries to stay in queue for a while:

Comparing the averages of the 48h averages (ofc the two may differ due to unpredictable federation workload, but both time intervals span several weeks and i think the difference is clearly greater than expected from background fluctuations alone):

Future outlook (things out of scope for this PR)

The main issue and last thing i did was graceful handling of

Deletes.It’s awkward to do in the current transmogrifier,validator,side_effect pipeline.

Ideally we’d want to look up the object only once and pass it along, instead of refetching it.

The check for orphaned activities is also awkward and given Activity queries are never cached thus more costly

I’m personally in favour of just dropping this and strongly recommending all

prune_objectsto be followed by or directly including an activity prune.Notably missing is skipping past duplicate user

Deletes;there’s even a test to ensure deletes of supposedly-deleted users are reattempted

since atm,

is_activeis immediately set tofalse, but cleaning p posts etchappens afterwards in a job which might fail.

Spurious user

Deletes are also common though, in fact my instance was hit by a buggy instancerepeatedly flooding the same user

Deleteevery 5 minutes for months until the remote admin fixed itand i’ve heard others be similarly affected by other instances of the same software.

Fixing this prob requires changing the deletion status to a tri-state

active,delete_in_progress,deletedand db migrations so i omitted it here.

Another future improvement would be to improve handling of duplicate inserts

which now appear to be the most frequent cause of

:link_resolveretries.E.g. when a followed remote user replies to and likes a post, the parent will be fetched

when processing the received reply, but also needs to be resolved when receiving the Like.

If both arrive nearly simultaneously it’s possible both start fetching (annoying remote servers with duplicated requests), but only one wins and the other errors out. If the Like looses, it will log a message

and the job will be retried.

Also the retry backoff for :rate_limit errors should ideally use the rate limit reset

info sent by the remote. Our current receiver retry backoff is far too short for this

and most likely just all retries will run into a rate_limit error.

It also doesn't do anything about the high insertion cost for

activities(on average 1000µs per insertion here; for

objectsit’s 290µs and for saner tables about 1-90µs)1c276f36d5to870d15de12870d15de12tocac7ab8bc0rebased to resolve the CHANGELOG conflict and added a new small commit to also log any crashes occurring during ReceiverWorker handling.

Nothing problematic happened since posting the patches.

Occasionally (like about once a day) there’s an odd failing job due to a missing object which should be impossible due to the prior checks added here, but this might come from some cache weirdness (cache changes don't obey transaction isolation)* and/or race condition. Usually those errors disappeared on retry. During the whole time so far only two

Update*(couldn't find the object to Update in later stages, eventhough initial checks found it) and oneDelete(trying to Delete an actor-less tombstone in later stages, eventhough initial checks for anactorfield succeeded) activities had persistent failures.Whatever it is almost certainly is a pre-existing thing though, since the problem always happens in parts not touched by this PR and evidently passes barriers set-up in this PR. Thus I don’t think they are blockers for merging this.

will stage this against current develop and run it on ihba for a while, will release as part of a patch in a week or two if it's all gud

cac7ab8bc0toca1681e224ca1681e224tob441251aedfor reference: after discussion on IRC, we suspect the

nilinbox issue addressed by 2025.01.01 resulted from an old OStatus user account never upgraded to AP and the removal ofap_enabledhere.AP requires actors to have an inbox and outbox and since a few versions we explicitly reject users without an inbox and (unintentionally) crash on explicit-

nilinboxes while parsing the user, effectively rejecting those at well.If true, this can only affect instances old enough to have started out with OStatus support still present and who never received anything from a follower triggering a user refresh (possibly because the remote died before adopting AP)

While it wasn’t linked to the removal of

ap_enabled, Pleroma experienced the same issue some months after releasing the removal ofap_enabledand applied a very similar fix: https://git.pleroma.social/pleroma/pleroma/-/merge_requests/4083There were no further

nilinbox patches in Pleroma afaict, making it seem unlikely this causes any further problems.The inbox value should automatically be updated on the next user refresh if they now support AP. If desired we could just leave it at the already deployed fix, or try to refresh all

ap_enabled=falseusers already during DB migration, but the existing fix needs to remain to deal with dead or temporarily unavailable remotes. Imho "just waiting for the next natural refresh" is good enoughAnd for now treat partial fetches as a success, since for all current users partial collection data is better than no data at all. If an error occurred while fetching a page, this previously returned a bogus {:ok, {:error, _}} success, causing the error to be attached to the object as an reply list subsequently leading to the whole post getting rejected during validation. Also the pinned collection caller did not actually handle the preexisting error case resulting in process crashes.Added another fix for preëxisting bugs related to collection fetches + two unrelated small fixes here since the exact commit likely depends on prior changes here anyway and there’s little point in untangling it just to deal with merge conflicts later.

94738f29cctof0a99b4595i have indeed been running this patch locally sincei last commented, with no issues noted